Oracle Database server has always been in the forefront when it comes to providing ground-breaking technologies that is critical to businesses and improving the organizations’ bottom line. Two features that have been greatly helpful for information management and data retrieval are: virtual tables (a.k.a views) and more recently in 11g they introduced virtual columns, derived values of columns based on data that is physically stored in other columns thereby reducing amount of space being used to store data. Another feature introduced in 10g when it comes to managing the explosive growth of data is the “BIGFILE” tablespaces.

Not too long ago, databases where measured in the 100s of GBs where individual tablespaces that made up the databases were a factor smaller. The primary reason BIGFILE tablespaces were introduced was to tackle the growing problem of data volume of today’s databases and the sheer complexity and time it takes for DBAs to manage the logical and physical data storage aspects; namely tablespaces and data files. Also, with the introduction of Automatic Storage Management (ASM) where some of the layers of the storage aspects were removed from a database standpoint and the SAME concept espoused in storage management, provided a compelling reason to adopt BIGFILE tablespaces.

There is a problem however. When the tablespaces were reasonably small and hence manageable in size, life was a little easy from a restore and recovery perspective.

With advanced features like flashback database, standby databases etc. one can argue that we can simply get the object quickly and easily but all of this had to be done in a “time frame”. More often than not, developers will realize that something horrible has happened well beyond the recovery window available via flashback databases or standby databases. Also, not all organizations out there have adopted these technologies. In such circumstances, the only option is to recover the tablespace that contains the object by the above method. And here comes the problem.

With the BIGFILE tablespaces, the restore and recovery of the tablespace and subsequently the object takes a lot longer because of the sheer amount of data volume (data files that need to be restored).

To summarize, here are the pros and cons of the two approaches:

- Difficult to manage

- Easier to restore and recover

- Easy to manage

- Difficult to restore and recover.

How about we came up with an approach to make bigfile tablespaces have all the advantages of both bigfile and smallfile tablespaces.

How about virtual tablespaces, along the lines of virtual tables, and virtual columns?

We know how the segments and extents of these segments are created and managed in a typical tablespace. Also, tablespaces themselves are logical in nature. In other words, these are virtual in nature and it is the data files underneath the tablespaces hold the data objects. So wouldn’t it be great if we could add another virtual layer to the BIGFILE tablespaces called a virtual tablespaces and database objects can be created on these virtual tablespaces?

I envision virtual tablespaces to be a layer in-between tablespaces and segments that make up the tablespace. The data segments and index segments are wholly contained in a virtual tablespace much like how it currently exists. This will provide the best of both worlds from a small file and a big file tablespace perspective.

1. From a maintenance viewpoint, this does not add any more overhead than what a bigfile tablespace entails.

2. When it comes to restore, because of data file multiplexing, which is very common during backups, the data files that makes up a tablespace are spread across backupsets and hence needs to be scanned for a full restore.

However, by creating a virtual tablespace, we could potentially reduce the time it takes to restore and recover by only accessing the portion of the backup that makes up the virtual tablespace. Then the final question would be how this “logical” entity will be recovered as a physical entity from which we can recover the data objects that are of interest? This would probably need a little more work. Not sure how practically feasible this is but it would a whole lot advantageous if we can have something like this.

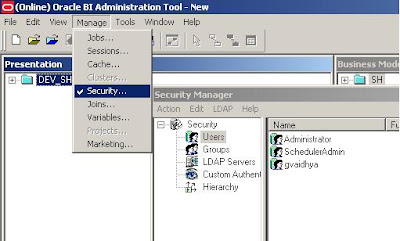

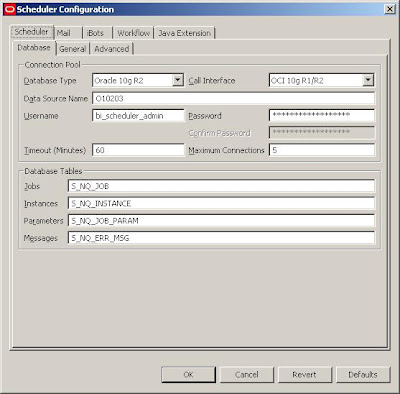

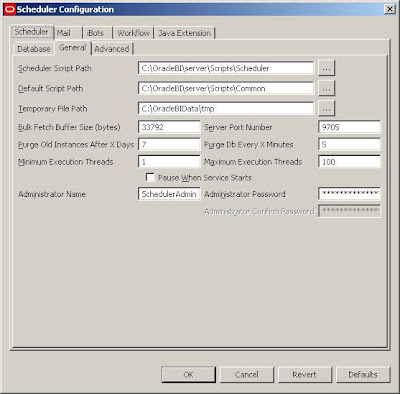

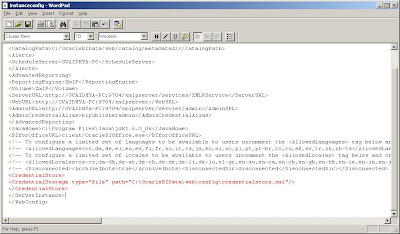

With this, all the setups needed to create and schedule an iBot is Complete.

With this, all the setups needed to create and schedule an iBot is Complete.